Like artificial intelligence itself, the AI startup SambaNova is interesting across the stack. From software to hardware, from technology to business model, and from vision to execution.

SambaNova has made the news for a number of reasons: high-profile founders, a series of funding rounds propelling it into unicorn territory, impressive AI chip technology and unconventional choices in packaging it. The company is now executing on its goal — to enable AI disruption in the enterprise.

SambaNova just announced its GPT-as-a-service offering, its ELEVAITE membership program for customers, and is working with one of the biggest banks in Europe to build what it claims will be Europe’s fastest AI supercomputer.

We connected with SambaNova CEO and co-founder Rodrigo Liang to talk about all that, plus one of our favorite topics: graphs and how they underpin SambaNova’s offering.

AI as a service

SambaNova recently raised a whopping $676M in Series D funding, surpassed $5B in valuation and became the world’s best-funded AI startup. Impressive as this may sound, it probably won’t last very much. The distinction of being “the world’s best-funded AI startup”, that is, not the funding. Liang, who has often referred to AI as “just as big, if not bigger than the internet”, would probably agree:

“People aren’t always aware in their own verticals that there’s an AI race going on. Think about banks, manufacturing, health care, all these different sectors where people are using AI as an opportunity to catapult their position within their sector. It’s the entire industry of AI. There’s a lot of really disruptive things going on, which we play one part of,” Liang said.

SambaNova just unveiled its GPT-as-a-service offering, which tells about how SambaNova approaches AI in the enterprise.

In stark contrast to Nvidia’s offering, for example, SambaNova just wants to do everything for its clients. From getting the model to customizing and training it, and then deploying, operating and maintaining it. That includes accessing the data required to custom-train GPT to client requirements, which Liang said can be done in any way needed — on-premise or in SambaNova’s infrastructure.

This is consistent with the way SambaNova ships its hardware: either as a box that includes everything from chips to networking or as a service. Liang said they have been asked to sell customers “just the chips” many times, and they could do that. But the company claims that the large majority of the world do not have the AI expertise to take chips or software at a low level and implement solutions.

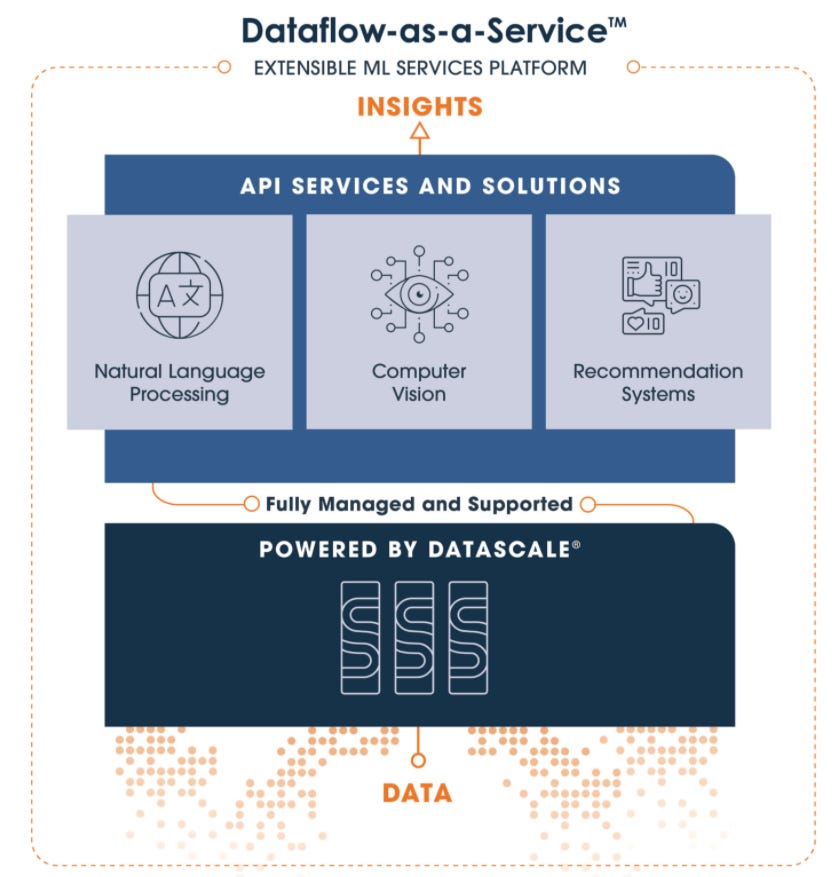

SambaNova has chosen to offer 3 AI model types as a service based on customer demands: language models, computer vision, and recommendation systems.

SambaNova

SambaNova’s focus is on getting as many of the Fortune 5000 (sic) companies to production with AI solutions as possible versus trying to talk to as many AI developers as possible. SambaNova does that, too, and developers love creating new models. Linag’s thesis, however, is that models have gotten to the point that they are “fantastic”, and despite incremental advances, value is all about the deployment in production.

This thesis is consistent not only with SambaNova co-founder Chris Re’s notion of “data-centric AI” but also with the shift of focus towards MLOps. As for the type of AI-powered services that SambaNova offers to its clients, Liang said that although they can be anything, as the dataflow substrate can adapt to any workload, the company has chosen to focus on 3 types of AI models.

GPT language models is one, high-definition computer vision is another one, and recommendation models are the third one. The decision is driven by customer demand. Liang said that although SambaNova’s offering includes customization and maintenance, the business model is subscription-based, not service-based. More Salesforce than Accenture. For the service-heavy parts, SambaNova works with a number of partners.

Dataflow: SambaNova’s edge is based on graph processing

The Dataflow architecture is what gives SambaNova its edge on flexibility and performance, according to Liang. Based on what is publicly available on Dataflow, we had the impression that Dataflow was designed starting from software, and more specifically, compilers. Liang confirmed this and went as far as to characterize SambaNova as “a software-first company”.

So how does Dataflow work? If we think about how neural networks work, we have interconnected nodes doing successive rounds of computation to see if each round’s output yields a better result than the previous one. You just continue to do those iterations over and over again, Liang noted. The computing that happens for that type of processing today is what people call “kernel by kernel”, he went on to add.

That, Liang notes, introduces inefficiency and increases the need for high bandwidth memory because there are many handshakes between the computational engine and an intermediate memory:

“As a computational engine, you did your computation, and then you send it back, and you let the host send you the next computational kernel, and then you start figuring out, oh, what do I need? The previous data was stored here; then I’ll get it. So it’s very hard to plan resources. We don’t know what’s coming. When you don’t know what’s coming, you don’t know what all the resources you might need are.

There’s a lot of really disruptive things going on in AI, and SambaNova is a part of that.

By sdecoret — Shutterstock

We started with the compiler stack. The first thing we want to do is say, look, these neural nets are very predictable. Even for something like GPT, as big as it is, we know the interconnections way in advance. Models are getting so big that the human eye and mind were not made to optimize for it. But compilers do a great job of that.

Suppose you allow the tool to come in and unroll the whole graph and just see every layer of the graph, every interconnection that you might need, where the section cuts are, where all the critical latency interconnections are, where the high bandwidth connections are. In that case, you actually have a chance of figuring out how to really optimally run this particular graph,” said Liang.

Liang went on to add the options available today — CPUs, GPUs, FPGAs — only know how to process one kernel at a time. SambaNova takes the computation graph, all bandwidth and latency problems, maps it, and keeps the data on the chip. Keeping all of these graphs and interconnections optimally tied together and making all the orchestration way in advance is key.

You can scale that for many graphs on one chip, or you can put one graph in hundreds of chips — the compiler doesn’t care. For example, some of SambaNova’s most sophisticated customers — in the US government — report that they’re getting 8X to 10X, sometimes 20X advantage compared to their GPU results that they’ve optimized for years, Liang said.

Interestingly, the last couple of times we saw results for MLPerf, SambaNova was not included. To clarify, that means SambaNova did not submit to MLPerf at all. The MLPerf test suite is the creation of the MLCommons, an industry consortium that issues benchmark evaluations for machine learning training and inference workloads. So the only way to verify Liang’s claims it to try SambaNova out, apparently. Benchmarks should be taken with a pinch of salt anyway, and the proof is in how things work in your own setting.

Regardless, we find the emphasis on graph processing for AI chips intriguing. SambaNova is not the only AI chip company to focus on that actually, and the race for graph processing is on.